Predictive analytics has its origins in the 1960s. In 1968, Norman Nie, along with colleagues at Stanford University, developed SPSS (Statistical Package for the Social Sciences) for analyzing large volumes of social science data. In many ways, the field of data analytics was born. The original SPSS manual, published in 1970, has often been described as “one of sociology’s most influential books” for allowing ordinary researchers to do their own statistical analysis.

At the time, however, nothing could have been further from the truth. The first versions of SPSS (and of its competitor, SAS) were clunky applications beyond the reach of most sociologists and researchers. The software ran on large mainframes made by companies such as Univac, Burroughs, and Control Data Corporation. Analyses had to be programmed on punch cards, in languages such as COBOL and Fortran, and required arcane job control languages. The software ran in batch mode, and jobs often took hours to complete.

Over the last 40 years, however, progress in data analytics has been nothing short of transformative. Today, Excel with its free Data Mining plug-in can be used to develop complex models. Open-source (meaning free) data mining tools, such as KNIME and R, offer capabilities comparable to high-end tools like SPSS and SAS. Models can be built through a simple drag-and-drop interface in just a couple of hours, with no programming. Mathematical models (logistic regression, Bayesian models, neural networks) have been standardized. Model tuning is parameterized and no longer an “art”. Anyone with a few undergraduate courses in statistics can master predictive analytics.
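To illustrate what one of these standardized models computes, here is a minimal logistic-regression sketch in Python that scores a constituent’s propensity to give. The two attributes (number of past gifts, years since last gift), the six training rows, and the learning rate and epoch count are all hypothetical, chosen only for illustration. This plain gradient descent is a teaching sketch; packaged tools typically use more sophisticated fitting routines.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function: maps any real score to (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(rows, labels, lr=0.1, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log-loss."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    m = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            p = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))
            err = p - y  # gradient of the log-loss w.r.t. the linear score
            for j in range(n_features):
                grad_w[j] += err * x[j]
            grad_b += err
        weights = [w - lr * g / m for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / m
    return weights, bias


def propensity(weights, bias, x):
    """Score one constituent: estimated probability that they will give."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


# Hypothetical training data: [past_gifts, years_since_last_gift] -> gave (1) or not (0)
rows = [[5, 1], [3, 1], [4, 2], [0, 6], [1, 5], [0, 8]]
labels = [1, 1, 1, 0, 0, 0]

weights, bias = train_logistic(rows, labels)
print(round(propensity(weights, bias, [4, 1]), 2))  # frequent, recent donor
print(round(propensity(weights, bias, [0, 7]), 2))  # lapsed non-donor
```

The score is simply the logistic function applied to a weighted sum of attributes; what the packaged tools automate is fitting those weights and validating the result.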

Analytics can now even be performed in the cloud. Microsoft, Google, Amazon, and others are pushing the technology boundary with inexpensive “cloud services” for analytics. All you have to do is send your data to the “cloud”, and you get back the modeled data with predictive scores. No need for software. No need for hardware. No need for data scientists.

What’s more, these cloud services cost very little. For a few hundred dollars, one could model the next non-donor campaign to estimate, say, each constituent’s propensity to give. Consider, for example, Microsoft’s Azure Machine Learning (machine learning being a new buzzword in the IT industry). There is no contract, no lock-in, and no set-up cost: you pay as you go. A seat (login) costs $9.99 per month, usage costs $2 per hour, and API calls cost 50 cents per thousand transactions.
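The rates quoted above make the “few hundred dollars” claim easy to check. The sketch below is a hypothetical cost estimator: the usage figures (one seat for one month, 50 hours of usage, 300,000 scoring calls, say one per constituent in a non-donor file) are illustrative assumptions, as is rounding partial blocks of 1,000 API transactions up; actual billing rules may differ.

```python
from dataclasses import dataclass

# Rates as quoted in the text, held in cents to avoid floating-point rounding.
SEAT_PER_MONTH_CENTS = 999     # $9.99 per seat (login) per month
USAGE_PER_HOUR_CENTS = 200     # $2 per hour of usage
PER_1000_API_CENTS = 50        # 50 cents per 1,000 API transactions


@dataclass
class CampaignUsage:
    """Hypothetical usage profile for one modeling campaign."""
    seats: int
    months: int
    usage_hours: int
    api_transactions: int


def estimate_cost_cents(u: CampaignUsage) -> int:
    """Total cost in cents under the quoted pay-as-you-go rates."""
    seat_cost = u.seats * u.months * SEAT_PER_MONTH_CENTS
    usage_cost = u.usage_hours * USAGE_PER_HOUR_CENTS
    # Assumption: a partial block of 1,000 transactions is billed as a full block.
    blocks = -(-u.api_transactions // 1000)  # ceiling division
    api_cost = blocks * PER_1000_API_CENTS
    return seat_cost + usage_cost + api_cost


usage = CampaignUsage(seats=1, months=1, usage_hours=50, api_transactions=300_000)
cents = estimate_cost_cents(usage)
print(f"${cents // 100}.{cents % 100:02d}")  # $259.99
```

Under these assumptions the API calls and compute hours dominate the bill, while the seat fee is nearly negligible.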

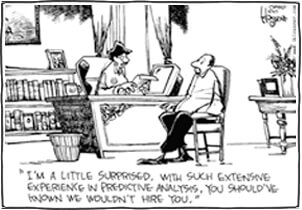

Predictive analytics tools are now a commodity. The value lies not in the tools (e.g., Microsoft’s Machine Learning API) but in domain knowledge: the ability to use these tools to develop insights and solve problems. For Advancement professionals, the opportunity lies in leveraging these tools to solve seemingly intractable problems such as donor churn, donor reactivation, new-donor acquisition, and college dropout, among others.

Let’s face it: despite much discussion about analytics, few institutions use it in their marketing campaigns. The barriers that may have held you back, however, are coming down, and now is the right time to revisit these technologies and harness their power to further your Advancement goals.

But analytics does require a rich repository of data. What attributes of your constituents do you need? Do you have them? So let’s delve into the world of Big Data, Small Data, and Smart Data.
